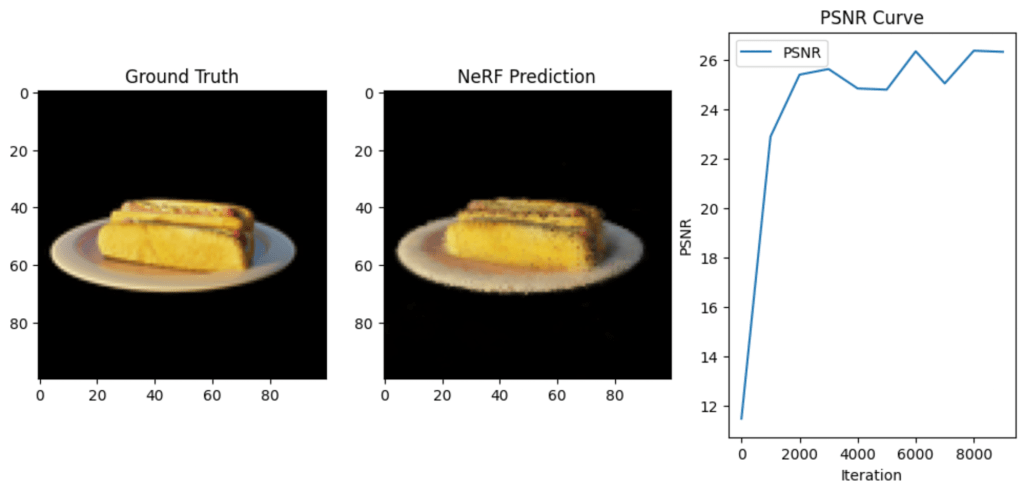

In this project, I implemented a NeRF-based volumetric rendering pipeline using PyTorch, OpenGL, and GLSL, training a neural network to reconstruct a 3D scene from a dataset of 2D images. The final result? A real-time NeRF renderer capable of generating smooth, realistic views from arbitrary camera angles.

Instead of storing a scene as a 3D mesh or point cloud, NeRF learns a function that maps spatial coordinates (x, y, z) and viewing direction to color (RGB) and density (sigma). This function is represented as a multi-layer perceptron (MLP), which I trained in PyTorch.
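To make that mapping concrete, here is a minimal pure-Python sketch of such a network. It is a toy, not the trained model. The class name `TinyRadianceField`, the layer sizes, and the random weights are all hypothetical. It also omits details a real NeRF relies on, such as positional encoding and much deeper layers. It follows the common NeRF design in which density depends only on position, while color also sees the viewing direction.

```python
import math
import random

def linear(weights, bias, v):
    """Dense layer: weights is a list of rows (out_dim x in_dim); returns W @ v + b."""
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weights, bias)]

def relu(v):
    return [max(0.0, x) for x in v]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def init_layer(in_dim, out_dim, rng):
    """Random (untrained) weights; a real model would load trained PyTorch weights."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(in_dim)] for _ in range(out_dim)]
    return weights, [0.0] * out_dim

class TinyRadianceField:
    """Hypothetical toy field: (x, y, z) and view direction -> (RGB, sigma)."""

    def __init__(self, hidden=16, seed=0):
        rng = random.Random(seed)
        self.trunk = init_layer(3, hidden, rng)                 # position -> features
        self.sigma_head = init_layer(hidden, 1, rng)            # features -> density
        self.color_layer = init_layer(hidden + 3, hidden, rng)  # features + direction
        self.rgb_head = init_layer(hidden, 3, rng)              # -> RGB

    def __call__(self, position, direction):
        features = relu(linear(*self.trunk, position))
        # Density sees only the position, so it is predicted before the direction is added.
        sigma = max(0.0, linear(*self.sigma_head, features)[0])  # ReLU keeps sigma >= 0
        h = relu(linear(*self.color_layer, features + list(direction)))
        rgb = [sigmoid(x) for x in linear(*self.rgb_head, h)]    # sigmoid keeps RGB in (0, 1)
        return rgb, sigma

field = TinyRadianceField()
rgb, sigma = field((0.1, 0.2, 0.3), (0.0, 0.0, 1.0))
print(rgb, sigma)
```

Training would adjust these weights so that rendered images match the input photos. The structure of the query (one point and one direction in, one color and one density out) stays the same.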

Once the MLP is trained, we render images by simulating light traveling through the scene. This involves:

1. Generating a camera ray for each pixel using the camera's intrinsic parameters (e.g., focal length) and extrinsic parameters (camera pose).

2. Sampling points along each ray and querying the MLP for their color and density.

3. Applying the volume rendering equation to accumulate color along the ray, producing a final image.
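The three steps above can be sketched end to end in plain Python. The sketch makes several assumptions: a pinhole camera looking down its local -z axis, a 3×4 camera-to-world matrix, and evenly spaced samples. A hard-coded sphere (`toy_field`, hypothetical) stands in for the trained MLP. Compositing uses the standard NeRF quadrature of the volume rendering equation, $\hat{C} = \sum_i T_i \,(1 - e^{-\sigma_i \delta_i})\, \mathbf{c}_i$ with $T_i = \exp\!\big(-\sum_{j<i} \sigma_j \delta_j\big)$, where $\delta_i$ is the spacing between samples.

```python
import math

def generate_ray(i, j, width, height, focal, cam_to_world):
    """Pinhole ray through pixel (i, j); cam_to_world is a 3x4 [R | t] matrix."""
    # Camera-space direction through the pixel centre (+y up, camera looks down -z).
    d_cam = [(i + 0.5 - width / 2) / focal, -(j + 0.5 - height / 2) / focal, -1.0]
    # Rotate into world space and normalize so t measures true distance.
    d_world = [sum(cam_to_world[r][c] * d_cam[c] for c in range(3)) for r in range(3)]
    norm = math.sqrt(sum(x * x for x in d_world))
    origin = [cam_to_world[r][3] for r in range(3)]  # camera position = translation
    return origin, [x / norm for x in d_world]

def sample_along_ray(origin, direction, near, far, n_samples):
    """Evenly spaced points o + t * d with t in [near, far]."""
    step = (far - near) / n_samples
    ts = [near + (k + 0.5) * step for k in range(n_samples)]
    return [[o + t * d for o, d in zip(origin, direction)] for t in ts]

def composite(rgbs, sigmas, delta):
    """Front-to-back accumulation: C = sum_i T_i * alpha_i * c_i."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # T_i: fraction of light that reaches sample i unblocked
    for rgb, sigma in zip(rgbs, sigmas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        color = [c + weight * x for c, x in zip(color, rgb)]
        transmittance *= 1.0 - alpha
    return color, 1.0 - transmittance  # final color and accumulated opacity

def toy_field(point):
    """Hypothetical stand-in for the MLP: a solid red unit sphere at the origin."""
    inside = sum(x * x for x in point) < 1.0
    return ([1.0, 0.0, 0.0], 5.0) if inside else ([0.0, 0.0, 0.0], 0.0)

WIDTH = HEIGHT = 64
FOCAL = 64.0
CAM_TO_WORLD = [[1.0, 0.0, 0.0, 0.0],
                [0.0, 1.0, 0.0, 0.0],
                [0.0, 0.0, 1.0, 4.0]]  # identity rotation, camera at z = 4

def render_pixel(i, j, field=toy_field, near=2.0, far=6.0, n_samples=64):
    origin, direction = generate_ray(i, j, WIDTH, HEIGHT, FOCAL, CAM_TO_WORLD)
    points = sample_along_ray(origin, direction, near, far, n_samples)
    rgbs, sigmas = zip(*(field(p) for p in points))
    return composite(rgbs, sigmas, (far - near) / n_samples)

print(render_pixel(32, 32))  # centre ray passes through the sphere
print(render_pixel(0, 0))    # corner ray misses it
```

The centre ray comes back nearly opaque red, while the corner ray accumulates no opacity. Practical implementations vectorize this over all rays at once and usually jitter the sample positions (stratified sampling) during training.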

NeRFs are typically slow to render because the network must be evaluated at many sample points along the ray for every pixel. To make rendering real-time, I converted the trained MLP into GLSL, hardcoding its weights as constants in the shader's matrix multiplications. I then implemented ray marching in an OpenGL fragment shader for interactive visualization.
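One way to bake a trained MLP into a shader is to generate the GLSL source from the exported weights. The sketch below is a hypothetical generator: `emit_glsl` and the emitted `queryField` function are illustrative names. It assumes a plain MLP over the concatenated position and direction, ReLU hidden layers, and a 4-output head for RGB and density. It unrolls each matrix multiplication into scalar multiply-adds with every weight written as a literal. Packing the weights into `mat4` constants is an alternative.

```python
def glsl_float(x):
    """Format a Python float as a GLSL float literal (always has a decimal point)."""
    return f"{x:.6f}"

def affine_expr(row, bias, inputs):
    """Unrolled dot product row . inputs + bias as a GLSL expression string."""
    expr = glsl_float(bias)
    for w, name in zip(row, inputs):
        sign = "+" if w >= 0 else "-"
        expr += f" {sign} {glsl_float(abs(w))} * {name}"
    return expr

def emit_glsl(hidden_layers, output_layer):
    """Emit a GLSL function with every weight baked in as a literal constant.

    hidden_layers: list of (weights, bias), weights stored as out_dim x in_dim rows,
    each followed by ReLU. output_layer: (weights, bias) with 4 rows -> r, g, b, sigma.
    """
    inputs = ["p.x", "p.y", "p.z", "d.x", "d.y", "d.z"]
    lines = ["vec4 queryField(vec3 p, vec3 d) {"]
    for li, (weights, bias) in enumerate(hidden_layers):
        outputs = []
        for o, (row, b) in enumerate(zip(weights, bias)):
            name = f"h{li}_{o}"
            lines.append(f"    float {name} = max(0.0, {affine_expr(row, b, inputs)});")
            outputs.append(name)
        inputs = outputs  # this layer's activations feed the next layer
    raw = [affine_expr(row, b, inputs) for row, b in zip(*output_layer)]
    # Sigmoid for color, ReLU for density, mirroring the output activations.
    lines.append(f"    vec3 rgb = 1.0 / (1.0 + exp(-vec3({raw[0]}, {raw[1]}, {raw[2]})));")
    lines.append(f"    float sigma = max(0.0, {raw[3]});")
    lines.append("    return vec4(rgb, sigma);")
    lines.append("}")
    return "\n".join(lines)

# Tiny illustrative weights: one hidden layer with 2 units.
hidden_layers = [([[0.5, 0.0, 0.0, 0.0, 0.0, 0.0],
                   [0.0, -0.25, 0.0, 0.0, 0.0, 0.0]], [0.0, 0.1])]
output_layer = ([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]], [0.0, 0.0, 0.0, 0.0])
glsl_src = emit_glsl(hidden_layers, output_layer)
print(glsl_src)
```

With a real model, the weights would come from the PyTorch layers (for example, `layer.weight.tolist()` and `layer.bias.tolist()` on each `nn.Linear`). The generated function would then be called at each step of the fragment shader's ray-marching loop.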

This project was an exciting deep dive into neural rendering, graphics programming, and high-performance computing. The combination of machine learning and real-time graphics opens the door to more advanced applications, from VR and gaming to robotics and autonomous vision systems.

Up next, I hope to explore Gaussian splatting for even faster rendering of 3D scenes reconstructed from 2D images.
